Exploring GPT3 - GPT3 for Data Labeling

How to use GPT3 model for data labeling (or data annotation)?

🍧Blog post 1 - How to use GPT3 for data labeling?

🍧Blog post 2 - How to further improve the quality of GPT3 labeled data?

🌴Data Annotation

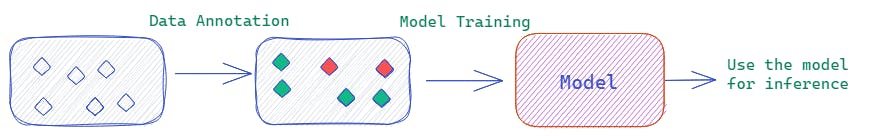

Data Annotation (also known as Data Labeling) is the first step in building any machine learning or deep learning-based system. Data Annotation involves assigning labels to the unlabeled instances i.e., data annotation involves developing labeled datasets. Once the dataset is labeled, we can use this labeled dataset to train the machine learning or deep learning models so that they can be used for inference i.e., to get the predictions for the new inputs.

We have three different approaches for data annotation namely

Human Labeling - This approach involves hiring and training domain experts to assign labels. This approach is more accurate to label the instances. However, it takes more time to hire and train the domain experts i.e., it is consuming. Additionally, it is expensive too.

Automatic Labeling - Models like GPT3 achieved very strong performances in zero and few-shot NLP tasks. After the success of the GPT3 model in low-resource (zero and few-shot) NLP tasks, NLP researchers focused on leveraging the GPT3 model as a cheap alternative to human labeling. Automatic labeling using GPT3 is much comparatively faster and cheaper too.

Human in the loop - This approach is a mix of human and automatic labeling approaches i.e., it combines the advantages of both approaches.

Now, you are going to see how to use the GPT3 model for data annotation

🌴GPT3 as a Data Annotator

After the success of the GPT1 [1] and GPT2 [2] models, Open AI developed GPT3 [3]. GPT3 is a large pre-trained model developed by Open AI and it has around 175B parameters. Due to its large size of 175B parameters and pretraining on large volumes of data, the model has gained a lot of knowledge. This knowledge allows the model to do well in zero and few-shot NLP tasks even without any fine-tuning. As human data labeling is an expensive and slow process, the success of the GPT3 model has made NLP researchers use it as a cheap yet quality data annotator. Now, let us see the different methods to use GPT3 for data labeling.

🌴Different approaches to using GPT3 as a Data Annotator

There are three different approaches to using GPT3 as a data annotator [4] namely

Prompt-Guided Unlabeled Data Annotation (PGDA)

Prompt Guided Training Data Generation (PGDG)

Dictionary Assisted Training Data Generation (DADG)

Any approach to using GPT3 as a data annotator involves creating a prompt. These three approaches differ in how the prompt is generated. Let us see each of these approaches in a detailed way.

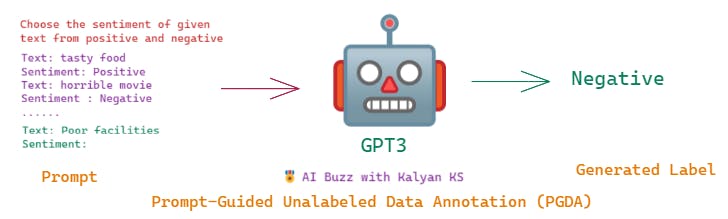

🌹Prompt-Guided Unlabeled Data Annotation (PGDA)

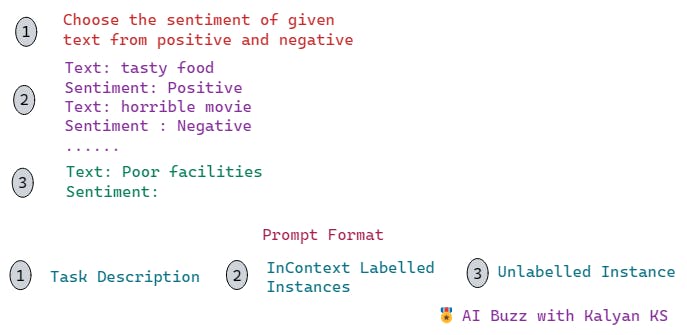

In this approach, the prompt is generated by concatenating task description, In context-labeled instances and unlabelled instance as shown in the figure

GPT3 model receives the prompt. Based on the task description and the given in-context labeled instances, the model understands the task and then predicts the label for the given unlabeled instance.

In this way, with just a few labeled instances in hand, you can annotate as many instances as you want using GPT3.

💡 How to choose the number of in-context labeled instances?

It can be one to any number you want. Increasing the number of in-context labeled instances enhances the quality of data annotation. This is because, as you increase the number of in-context labeled instances, the model can better understand the task and hence generates correct labels in most of the cases. However, increasing in-context labeled instances increases the cost of using GPT-3 and hence increases the data annotation cost also. So, depending on your budget you have to choose the number of in-context labeled instances.

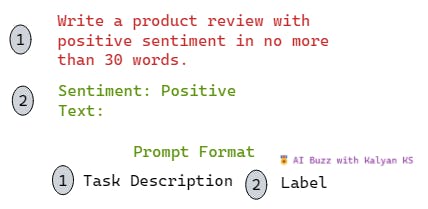

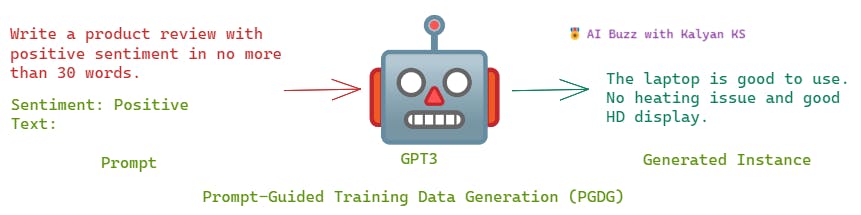

🌹Prompt-Guided Training Data Generation (PGDG)

In this approach, the prompt is generated by concatenating the task description and the label as shown in the figure.

Depending on the task description and the given label as prompt, the GPT3 model generates the instance.

In this way, without any labeled instances in hand, you can annotate as many instances as you want using GPT3.

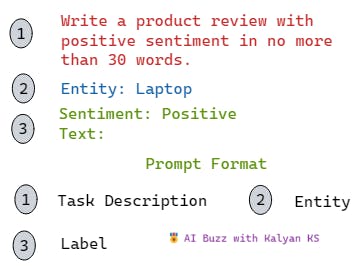

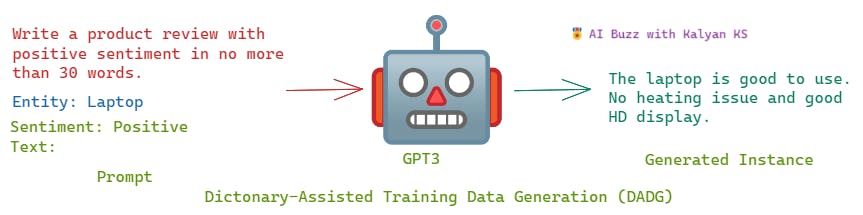

🌹Dictionary Assisted Training Data Generation (DADG)

In this approach, the prompt is generated by concatenating the task description, entity and label as shown in the figure.

Depending on the task description, entity and the given label as prompt, the GPT3 model generates the instance.

In this way, without any labeled instances in hand, you can annotate as many instances as you want using GPT3.

🌴Which approach is the best?

Once the data is annotated using GPT3, you can use the annotated data to fine-tune the smaller models like BERT and use them in production. In the previous section, you have seen the different approaches to using GPT3 for data annotation. Now it's time to discuss the pros and cons of each approach based on three criteria namely the cost of annotation, the time taken for annotation and the quality of annotation. Such a discussion helps you to know which approach to choose depending on the constraints.

Cost - The GPT3 usage is charged based on the number of tokens it processes. When compared to all three approaches, the PGDG approach is less expensive as the prompt in this approach includes only the task instruction and the label i.e., the least number of tokens out of all the three approaches. If you observe the prompts of the other two approaches, the prompt in the PGDA approach includes task description, in-context labeled instances and unlabeled instance while the prompt in the DGDA approach involves task description, entity and label.

💡 To conclude, PGDG is the most effective approach in terms of cost for using GPT3 as a data annotator.

Time - Similar to cost, the time taken for data annotation also depends on the number of tokens. In simple words, the approach that involves the least number of tokens takes the least amount of time for data annotation.

💡 To conclude, PGDG is the most effective approach in terms of time taken for data annotation using the GPT3 model.

Quality of data annotation - The quality of data annotation is evaluated based on the performance of the downstream model which is fine-tuned using the GPT3 annotated data. In general, the PGDA approach outperforms other approaches. This is because the prompt in PGDA includes in-context labeled instances along with task description. The presence of one or more in-context labeled instances allows the GPT3 model to understand more about the task and hence the model can generate more quality labels. Here more quality labels in the sense, GPT3 assigns correct labels to more instances compared to other approaches. You can refer to this paper, for more details regarding this.

💡 To conclude, PGDA is the most effective approach in terms of quality annotation using the GPT3 model.

🌴 What Next?

Check out the next blog post to learn how you can further improve the quality of GPT3 labeled data.

References

[1] Radford, A., Narasimhan, K., Salimans, T. and Sutskever, I., 2018. Improving language understanding by generative pre-training.

[2] Radford, A., Wu, J., Child, R., Luan, D., Amodei, D. and Sutskever, I., 2019. Language models are unsupervised multitask learners. OpenAI blog, 1(8), p.9.

[3] Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J.D., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A. and Agarwal, S., 2020. Language models are few-shot learners. Advances in neural information processing systems, 33, pp.1877-1901.

[4] Bosheng Ding, Chengwei Qin, Linlin Liu, Lidong Bing, Shafiq Joty, Boyang Li, "Is GPT-3 a Good Data Annotator?"