Exploring ChatGPT - Overview, Training and Limitations

OpenAI's ChatGPT explained in simple terms

Introduction

Conversational AI is an area of Artificial Intelligence that focuses on developing tools such as chatbots, which can interact with users much as humans do. Conversational AI has received a lot of attention in academia and industry because of its many real-world applications. Early chatbots generated responses based on a set of predefined rules. With the success of transformer-based pretrained language models in various NLP tasks, dialogue-oriented pretrained language models have been developed. Unlike rule-based chatbots, chatbots built on these pretrained models can generate more natural responses to user inputs.

ChatGPT is an extraordinary chatbot model released by OpenAI in November 2022. In no time, social media platforms were flooded with ChatGPT-related posts.

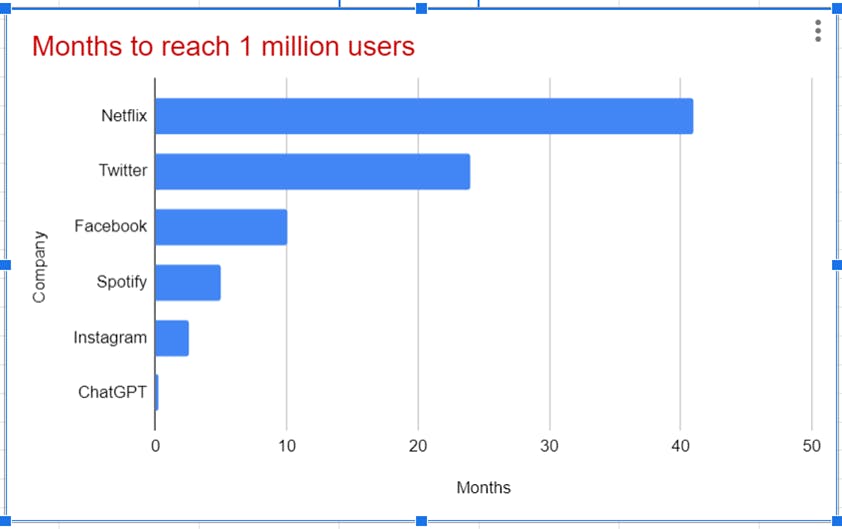

The figure illustrates how popular ChatGPT became after its release: the number of ChatGPT users reached the 1 million mark within a week. Before ChatGPT, there were other chatbot models, such as LaMDA from Google and BlenderBot from Meta. BlenderBot 3 is based on Meta's publicly available 175B-parameter OPT model.

ChatGPT Overview

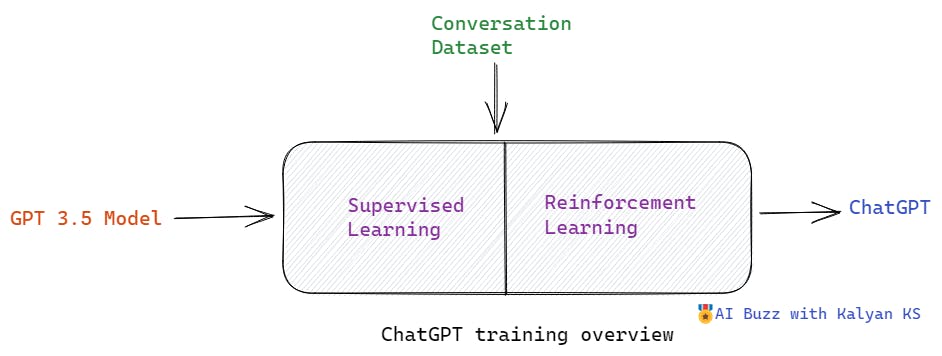

ChatGPT is a dialogue-oriented pretrained language model developed by OpenAI. It is a decoder-only transformer model that uses a self-attention mechanism to understand user inputs and then generate responses accordingly. ChatGPT is initialized from a GPT-3.5 model and further trained on a large conversation dataset using both supervised learning and reinforcement learning in multiple stages.
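To make "decoder-only" and "self-attention" a little more concrete, here is a minimal single-head sketch of causal (masked) self-attention in plain Python. The embeddings, the 2-dimensional size, and the identity projection matrices are hypothetical toy values, not ChatGPT's actual weights; a real model stacks many heads and layers with learned parameters. The key decoder property is the causal mask: each token can only attend to itself and earlier tokens, which is what lets the model generate text left to right.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(
    x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix
) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention with a causal mask.

    Each row of x is one token's embedding. Returns the attended outputs and
    the attention weights for every position.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, weights = [], []
    for i, q_i in enumerate(q):
        # Causal mask: position i only sees positions 0..i, never future tokens.
        scores = [
            sum(a * b for a, b in zip(q_i, k[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors of the visible positions.
        outputs.append(
            [sum(w[j] * v[j][c] for j in range(i + 1)) for c in range(len(v[0]))]
        )
    return outputs, weights


# Three hypothetical token embeddings of dimension 2, with identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = causal_self_attention(x, identity, identity, identity)
for i, row in enumerate(attn):
    print(f"token {i} attends to {len(row)} position(s): {[round(p, 3) for p in row]}")
```

The first token can only attend to itself, so its attention weight is always 1; later tokens spread their attention over everything that came before them.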

Because it is trained on conversational data, ChatGPT has acquired the ability to generate more natural and appropriate responses to user inputs.

ChatGPT Training

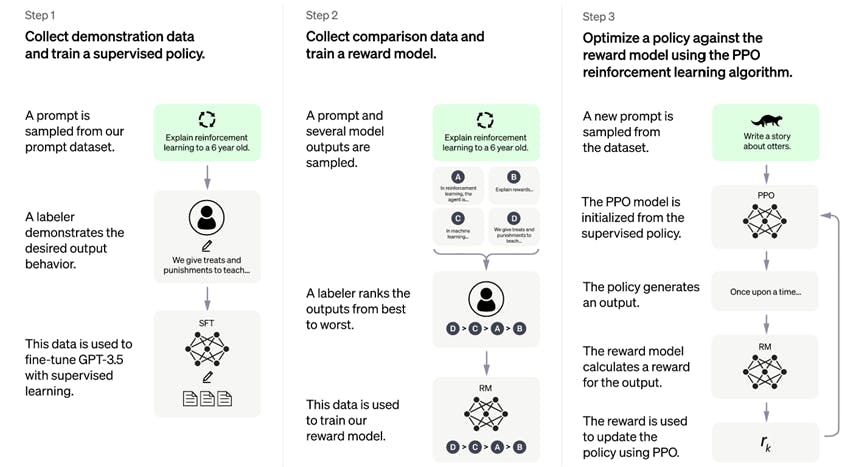

ChatGPT is trained on conversation data using supervised learning and reinforcement learning. The entire training process involves three steps:

1. Adapting the GPT-3.5 model to conversations using supervised learning

2. Training a reward model on human rankings of the model's generated answers

3. Improving the model further using reinforcement learning

(Image source: OpenAI ChatGPT blog post)

Let us look at each of these steps in detail.

Step 1: Adapting the GPT-3.5 model to conversations using supervised learning

The first step is to collect conversation data written by human AI trainers. An input (prompt) is sampled, and a human AI trainer writes the desired answer for it. For ChatGPT, the trainers played both sides of the conversation: the user and the AI assistant. In this way, the conversation dataset is generated entirely by human AI trainers.

Using this dataset, the GPT-3.5 model is fine-tuned on conversations with supervised learning. At the end of this step, the fine-tuned model can generate answers for given inputs.
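Supervised fine-tuning of this kind is commonly implemented as ordinary next-token prediction on the demonstration data. The sketch below assumes that setup, and additionally assumes the loss is computed only on the answer tokens (a common choice, not a detail confirmed by OpenAI). The token IDs are hypothetical, and a uniform probability table stands in for GPT-3.5's predictions.

```python
import math
from typing import List, Tuple


def build_sft_example(
    prompt_ids: List[int], answer_ids: List[int]
) -> Tuple[List[int], List[int], List[bool]]:
    """Turn one (prompt, answer) demonstration into a next-token training example."""
    tokens = prompt_ids + answer_ids
    inputs = tokens[:-1]   # what the model reads
    targets = tokens[1:]   # what it must predict at each position
    # Assumption: only predictions of answer tokens contribute to the loss.
    loss_mask = [t + 1 >= len(prompt_ids) for t in range(len(targets))]
    return inputs, targets, loss_mask


def sft_loss(
    probs: List[List[float]], targets: List[int], loss_mask: List[bool]
) -> float:
    """Mean negative log-likelihood of the target tokens where loss_mask is True.

    probs[t][v] is the model's probability of token v at position t.
    """
    nll = [
        -math.log(probs[t][targets[t]])
        for t in range(len(targets))
        if loss_mask[t]
    ]
    return sum(nll) / len(nll)


# Hypothetical token IDs: prompt = [1, 2], trainer-written answer = [3, 4].
inputs, targets, mask = build_sft_example([1, 2], [3, 4])
vocab_size = 5
uniform = [[1.0 / vocab_size] * vocab_size for _ in targets]  # untrained toy model
print(inputs, targets, mask)
print(f"loss = {sft_loss(uniform, targets, mask):.4f}")  # ln(5) for a uniform model
```

Training lowers this loss by raising the probability the model assigns to the trainer-written answer tokens.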

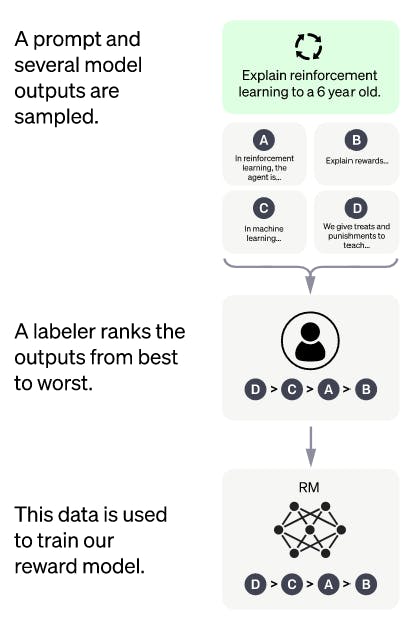

Step 2: Training a reward model

In Step 1, the GPT-3.5 model is fine-tuned on a human-written conversation dataset with supervised learning so that it can generate answers. However, the quality of the answers the model generates for a given input can vary from best to worst. So the model generates several answers for the same input, and human AI trainers rank these answers. A reward model is then trained on these human rankings. At the end of Step 2, the reward model outputs a score for any generated answer, and these scores (i.e., rewards) are used in Step 3, which trains the model with reinforcement learning.
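One way to train a reward model from rankings, described in OpenAI's InstructGPT work, is a pairwise loss: for every pair of answers to the same input, the score of the higher-ranked answer should exceed the score of the lower-ranked one. The sketch below assumes that formulation. The helper names are illustrative, and the scores are hypothetical numbers; in practice they come from a neural network that reads both the input and the answer.

```python
import math
from itertools import combinations
from typing import List


def softplus(x: float) -> float:
    """log(1 + exp(x)), computed without overflow."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_ranking_loss(scores_best_to_worst: List[float]) -> float:
    """Average of -log(sigmoid(r_better - r_worse)) over all answer pairs.

    scores_best_to_worst[i] is the reward model's score for the answer that
    human trainers ranked i-th (index 0 = best).
    """
    pairs = list(combinations(range(len(scores_best_to_worst)), 2))
    total = 0.0
    for better, worse in pairs:
        margin = scores_best_to_worst[better] - scores_best_to_worst[worse]
        total += softplus(-margin)  # equals -log(sigmoid(margin))
    return total / len(pairs)


# Hypothetical reward-model scores for four answers, listed in human ranking order.
agrees_with_humans = [2.0, 1.0, 0.5, -1.0]
disagrees_with_humans = list(reversed(agrees_with_humans))
print(f"{pairwise_ranking_loss(agrees_with_humans):.4f}")     # lower loss
print(f"{pairwise_ranking_loss(disagrees_with_humans):.4f}")  # higher loss
```

Minimizing this loss pushes the reward model to score answers in the same order the human trainers ranked them.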

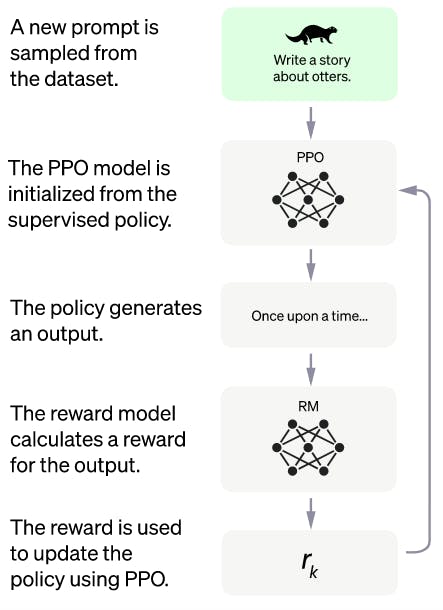

Step 3: Training the model using reinforcement learning

In this step, for each input, the policy model generates a response. This model is initialized from the model obtained at the end of Step 1. The reward model trained in Step 2 then computes a score (reward) for the generated response, and the policy is updated based on these rewards using PPO (Proximal Policy Optimization). This process is repeated over several iterations to improve the generated answers.
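The core of PPO is a clipped objective that keeps any single update from moving the policy too far from the version that generated the responses. Below is a minimal sketch of that objective in plain Python, together with an InstructGPT-style reward that subtracts a penalty for drifting away from the Step 1 model. The helper names and coefficient values (`clip_eps=0.2`, `beta=0.02`) are illustrative assumptions. The advantage estimates, which a full implementation derives from the rewards using a learned value function, are taken here as given numbers.

```python
import math
from typing import List


def shaped_reward(
    rm_score: float, logp_policy: float, logp_sft: float, beta: float = 0.02
) -> float:
    """Reward-model score minus a KL-style penalty for drifting from the Step 1 model.

    logp_policy and logp_sft are the log-probabilities that the current policy
    and the frozen supervised model assign to the generated response.
    """
    return rm_score - beta * (logp_policy - logp_sft)


def ppo_clipped_objective(
    logp_new: List[float],
    logp_old: List[float],
    advantages: List[float],
    clip_eps: float = 0.2,
) -> float:
    """PPO's clipped surrogate objective (to be maximized), averaged over samples."""
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)  # pi_new(response) / pi_old(response)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum is pessimistic: no extra credit for pushing the
        # ratio beyond the clip range, full penalty when the change is harmful.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)


# Hypothetical numbers: the new policy doubled the probability of a good response.
print(shaped_reward(rm_score=1.5, logp_policy=-2.0, logp_sft=-2.5))  # 1.5 - 0.02 * 0.5
print(ppo_clipped_objective([math.log(2.0)], [0.0], [1.0]))  # capped at 1 + clip_eps
```

Because the gain is capped at `1 + clip_eps` times the advantage, the optimizer has no incentive to change the policy drastically on any one batch, which helps keep reinforcement learning stable.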

In this way, the GPT-3.5 model is turned into ChatGPT by fine-tuning it on human-generated conversations with supervised learning and then with reinforcement learning.

Limitations

Sensitivity to prompts: The answer generated by ChatGPT depends heavily on how the prompt is phrased. For example, the model may answer a question for one prompt, yet claim that it does not know the answer when the same question is slightly rephrased. So remember that only a well-crafted prompt gets the best possible answer out of the model.

Bias: In general, deep learning models are known to acquire the biases present in their training data. These biases can relate to gender, race, or location, and ChatGPT is no exception.

Incorrect answers: In some cases, ChatGPT fails to answer even easy questions correctly.